A Long-Context Re-Ranker for Contextual Retrieval to Improve the Accuracy of RAG Systems

Why does AI sometimes miss answers that are clearly in the knowledge base? One reason is how RAG systems process documents. The "document chunking" step often leads to loss of semantics, semantic ambiguity, or missing global structure. AI may only see "local information" and overlook "global meaning."

To address this, our ChatDoc research team introduced Contextual Retrieval Technology that enables AI to bridge fragmented documents through structural parsing and semantic reorganization. Experimental results show that RAG systems using contextual retrieval saw an average 13.9% improvement in accuracy in Q&A tasks, providing more precise answers in corporate knowledge base searches or personal document management.

1. Background

1.1 What is RAG?

Large language models (LLMs) have made significant strides in recent years, demonstrating remarkable capabilities across various tasks. However, when these general models are applied to specific business scenarios, their intrinsic knowledge often fails to meet practical needs.

This is mainly due to LLMs' knowledge limitations. Their knowledge is derived from training data, typically from publicly available sources, making it challenging to cover the latest information, proprietary content, or niche knowledge. As a result, when dealing with application requirements in specific fields, researchers often use Retrieval-Augmented Generation (RAG) technology to introduce relevant external knowledge into LLMs.

RAG combines retrieval models with generation models. It retrieves information related to the user's query from private or proprietary data sources and feeds it as additional context into the model's prompt. This method effectively compensates for the model's knowledge gaps, enabling it to generate more accurate and richer answers and improving its performance on specific tasks.

1.2 RAG Workflow

Rather than relying solely on an LLM to generate answers, RAG systems incorporate a retrieval mechanism to make the generated results more accurate and domain-appropriate. Before it can process user queries, a RAG system needs a data preparation stage that makes retrieval efficient. This stage preprocesses various types of raw data to make them suitable for retrieval and stores them in a database. Typically, it involves three key steps:

- Document Parsing: This step involves transforming varied knowledge documents into a processable text format, and optimizing unstructured data (such as PDF) into structured paragraphs and tables suitable for downstream processing.

- Text Chunking: The parsed text is sectioned into manageable units (chunks) that enhance retrieval accuracy and granularity. These chunks typically contain several hundred words.

- Vectorization: The text chunks are converted into semantic vectors using embedding models and then stored in a vector database to support efficient retrieval.

Upon completing data preparation, user queries are transformed into semantic vectors, enabling the retrieval of relevant text chunks from the vector database. The system then re-ranks the retrieved chunks to select the most relevant content. Finally, the system combines these highly relevant text chunks with the user's query to form a comprehensive prompt for the LLM, thereby generating a final response.
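
The workflow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not our production pipeline: the bag-of-words `embed` function stands in for a real embedding model, the in-memory list stands in for a vector database, the re-ranking step is omitted, and all function names and the sample document are hypothetical.

```python
import math
import re
from collections import Counter

def chunk_words(text, size=8):
    """Split text into fixed-size word chunks (a stand-in for real chunking)."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def embed(text):
    """Toy 'embedding': a bag-of-words count vector (assumption, not a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, index, top_k=2):
    """Return the top_k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]

def build_prompt(query, chunks):
    """Combine the retrieved chunks and the query into one prompt for the LLM."""
    context = "\n\n".join(chunks)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"

document = ("The warranty covers battery defects for two years. "
            "Shipping takes five business days within Europe. "
            "Returns are accepted within thirty days of delivery.")
index = [(c, embed(c)) for c in chunk_words(document)]  # data preparation
query = "How long is the battery warranty?"
top = retrieve(query, index, top_k=1)                   # retrieval
prompt = build_prompt(query, top)                       # prompt for the LLM
print(prompt)
```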

2. Bottlenecks in RAG

Among the three core steps in the data preparation of RAG, "vectorization" has received the most attention from researchers. This step directly affects retrieval accuracy, which has made training better embedding models a key focus of RAG research. With technological advancements, today's embedding models have made significant progress compared to the past, adapting to diverse linguistic and domain needs.

However, even a perfect embedding model cannot solve all the problems RAG systems face. As models improve, performance gains gradually plateau. In our experience with RAG applications, the long-overlooked issues of Context Loss and Context Ambiguity are becoming key bottlenecks that limit the effectiveness of RAG. To address them, we introduce a contextual retrieval method that further improves the answer quality of RAG systems.

2.1 The Issue of Context Loss

Text chunking methods typically use fixed-length chunking, dividing text based on preset word count or punctuation. While this simple rule is easy to implement, it may lead to semantic integrity loss in practical applications. For example, multiple closely related paragraphs may be split into different text chunks, resulting in fragmented information that affects retrieval accuracy.

For example, from a section on indirect free kick rulings on page 108 of the "Laws of the Game 2024/25":

An indirect free kick is awarded if a player:

• plays in a dangerous manner

• impedes the progress of an opponent without any contact being made

• is guilty of dissent, using offensive, insulting or abusive language and/or action(s) or other verbal offences

• prevents the goalkeeper from releasing the ball from the hands or kicks or attempts to kick the ball when the goalkeeper is in the process of releasing it

• initiates a deliberate trick for the ball to be passed (including from a free kick or goal kick) to the goalkeeper with the head, chest, knee etc. to circumvent the Law, whether or not the goalkeeper touches the ball with the hands; the goalkeeper is penalised if responsible for initiating the deliberate trick

• commits any other offence, not mentioned in the Laws, for which play is stopped to caution or send off a player

Under the fixed-length chunking strategy, this passage may be split into the following two text chunks:

<Chunk 1>

An indirect free kick is awarded if a player:

• plays in a dangerous manner

• impedes the progress of an opponent without any contact being made

• is guilty of dissent, using offensive, insulting or abusive language and/or action(s) or other verbal offences

<Chunk 2>

• prevents the goalkeeper from releasing the ball from the hands or kicks or attempts to kick the ball when the goalkeeper is in the process of releasing it

• initiates a deliberate trick for the ball to be passed (including from a free kick or goal kick) to the goalkeeper with the head, chest, knee etc. to circumvent the Law, whether or not the goalkeeper touches the ball with the hands; the goalkeeper is penalised if responsible for initiating the deliberate trick

• commits any other offence, not mentioned in the Laws, for which play is stopped to caution or send off a player

When a user asks: "when is an indirect free kick awarded?"

During the retrieval process, Chunk 1 is more likely to be recalled by the model because it contains the complete clause title. In contrast, the content of Chunk 2 is separated from the clause title, making it difficult for the model to associate the content of Chunk 2 with the awarding of an indirect free kick. As a result, the RAG generation model relies solely on Chunk 1 to provide an answer, which limits the application of indirect free kicks to only the first three listed scenarios, thereby omitting critical information and affecting the completeness and accuracy of the response.

This example shows that a simple fixed-length chunking method may disrupt semantic continuity, leading to incomplete information retrieval and ultimately impacting the quality of RAG-generated responses.
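
The effect is easy to reproduce. The sketch below is an illustration only: the greedy word-budget splitter and the 30-word limit are assumptions chosen for the demo, and the bullet items are abbreviated from the clause quoted above. The second chunk ends up with no mention of "indirect free kick".

```python
def fixed_length_chunks(lines, max_words):
    """Greedy fixed-length chunking: start a new chunk once the word budget is exceeded."""
    chunks, current, count = [], [], 0
    for line in lines:
        n = len(line.split())
        if current and count + n > max_words:
            chunks.append(current)
            current, count = [], 0
        current.append(line)
        count += n
    if current:
        chunks.append(current)
    return chunks

# Abbreviated version of the clause quoted above (shortened for the demo).
clause = [
    "An indirect free kick is awarded if a player:",
    "• plays in a dangerous manner",
    "• impedes the progress of an opponent without any contact being made",
    "• prevents the goalkeeper from releasing the ball from the hands",
    "• commits any other offence for which play is stopped to caution a player",
]

chunks = fixed_length_chunks(clause, max_words=30)
for i, chunk in enumerate(chunks, start=1):
    has_heading = any("indirect free kick" in line for line in chunk)
    print(f"Chunk {i}: {len(chunk)} lines, mentions 'indirect free kick': {has_heading}")
```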

2.2 The Issue of Context Ambiguity

Besides information loss, text chunking can also result in chunks being disconnected from their original document context, weakening semantic integrity and coherence. This disconnection may cause semantic misunderstandings, leading to ambiguity in the answers generated by the RAG system.

Take the excerpt from pages 13 and 18 of "Administration of Land Ports of Entry" as an example:

[quote from page 13]

......(The introduction of Brazil)

II. Definition of Land Ports of Entry

Ordinance RFB No. 143 defines customs clearance, which seems to be the equivalent to a land port of entry in the US, as the authorization provided by the Special Secretariat of the RFB, so that, in the places or areas specified in article 3 of Ordinance No. 143, and under customs control, the following activities may occur: ......

[quote from page 18]

......(The introduction of Canada)

II. Definition of a Land Port of Entry (LPOE)

Among the main subsidiary regulations under IRPA are the Immigration and Refugee Protection Regulations, which define “port of entry” as a “place designated by the Minister [of Public Safety] under section 26 as a port of entry, on the dates and during the hours of operation designated for that place by the Minister.” The minister of Public Safety can designate a POE on the basis of the following factors:

During the text chunking process, the system may split these two definitions into the following two text chunks, each cut off from the country introduction that precedes it:

<Chunk 1>

II. Definition of Land Ports of Entry

Ordinance RFB No. 143 defines customs clearance, which seems to be the equivalent to a land port of entry in the US, as the authorization provided by the Special Secretariat of the RFB, so that, in the places or areas specified in article 3 of Ordinance No. 143, and under customs control, the following activities may occur: ......

<Chunk 2>

II. Definition of a Land Port of Entry (LPOE)

Among the main subsidiary regulations under IRPA are the Immigration and Refugee Protection Regulations, which define “port of entry” as a “place designated by the Minister [of Public Safety] under section 26 as a port of entry, on the dates and during the hours of operation designated for that place by the Minister.” The minister of Public Safety can designate a POE on the basis of the following factors:

When a user asks: "How does Canada define Land Ports of Entry?" Both text chunks lack information related to Canada, making it difficult to accurately retrieve the target text chunks. Even if these two text chunks are retrieved, the LLM, in the absence of context, finds it challenging to correctly distinguish which definition pertains to Canada's regulations, potentially generating incorrect or ambiguous answers.

This example shows that text chunking may lead to semantic ambiguity due to contextual disruption, thereby affecting the accuracy of retrieval and generation.

2.3 The Issue of Global Document Hierarchy

In many cases, user queries not only involve specific content but also rely on the document's hierarchical structure. However, text chunking can disrupt the original hierarchical relationships in documents, making it hard for each chunk to reflect the global structure. As a result, retrieval models may fail to recall relevant content correctly, and LLMs may struggle to understand the relationships between text chunks.

The following is part of the content of the section Maximum subarray sum in Chapter 2 of "Competitive Programmer's Handbook".

### Maximum subarray sum

Algorithm 1

A straightforward way to solve the problem is to go through all possible subarrays, calculate the sum of values in each subarray and maintain the maximum sum. The following code implements this algorithm:

C++

Algorithm 2

It is easy to make Algorithm 1 more efficient by removing one loop from it. This is possible by calculating the sum at the same time when the right end of the subarray moves. The result is the following code:

C++

Algorithm 3

Surprisingly, it is possible to solve the problem in O(n) time, which means that just one loop is enough. The idea is to calculate, for each array position, the maximum sum of a subarray that ends at that position. After this, the answer for the problem is the maximum of those sums. The following code implements the algorithm:

C++

After text chunking, the content of this section may be divided into the following multiple text chunks:

<Chunk 1>

### Maximum subarray sum

Algorithm 1

A straightforward way to solve the problem is to go through all possible subarrays, calculate the sum of values in each subarray and maintain the maximum sum. The following code implements this algorithm:

C++

<Chunk 2>

Algorithm 2

It is easy to make Algorithm 1 more efficient by removing one loop from it. This is possible by calculating the sum at the same time when the right end of the subarray moves. The result is the following code:

C++

<Chunk 3>

Algorithm 3

Surprisingly, it is possible to solve the problem in O(n) time, which means that just one loop is enough. The idea is to calculate, for each array position, the maximum sum of a subarray that ends at that position. After this, the answer for the problem is the maximum of those sums. The following code implements the algorithm:

C++

When a user asks: "How many algorithms are described in the 'Maximum Subarray Sum' section?" The retrieval model struggles to recall all relevant text chunks because text chunks 2 and 3 lack the hierarchical identifier for "Maximum Subarray Sum." Even if some text chunks are retrieved, the LLM cannot clearly identify that this content belongs to the "Maximum Subarray Sum" section, which may result in incorrect or incomplete answers.

This example shows that text chunking can lead to the loss of hierarchical information in documents, making it difficult for the RAG system to understand and organize content.

3. Existing Solutions

Some research has already focused on the problems introduced by text chunking and has proposed corresponding solutions. This section introduces several typical approaches.

3.1 Text Chunk Clustering Summary

In the paper "RAPTOR: Recursive Abstractive Processing for Tree-Organized Retrieval," the authors propose a solution based on clustering text chunks to address the problem of missing global context in individual text chunks.

This method first uses clustering algorithms to group the split text chunks semantically, and then uses an LLM to generate a summary for each group of chunks. These chunks and their summaries are organized into a tree structure, with the higher-level nodes representing summaries of multiple chunks and the lower-level nodes representing the actual chunks. When the user asks a question, the retrieval model begins from the higher-level nodes and works its way down the tree to find relevant chunks.

While this method has shown good results on some test data, it faces limitations in efficiency due to the tree structure. Constructing a tree requires clustering and summarizing multiple text chunks, which is a complex and computationally intensive process, making it difficult to scale for large document sets. Additionally, the effectiveness of chunk clustering for complex documents in real-world business scenarios still needs further evaluation.

3.2 Semantic Augmentation of Text Chunks

Given the efficiency bottlenecks of the chunk clustering and summarization method, current practices tend to favor using semantic augmentation strategies for text chunks.

The team at Anthropic proposed a semantic augmentation method based on long-context LLMs (https://www.anthropic.com/news/contextual-retrieval) to enhance the completeness and comprehensibility of text chunks. Specifically, this method inputs each text chunk along with the full text into a long-context LLM. By leveraging the model's understanding of the entire document, it supplements the current chunk with additional context, making it semantically richer and avoiding ambiguity.
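
The general shape of this augmentation step can be sketched as follows. This is a rough illustration, not Anthropic's code: the prompt wording, the function names, and the `generate` callable (a placeholder for whatever long-context LLM client is used) are all assumptions, and `fake_llm` returns a canned string so that the example runs without any API access.

```python
from typing import Callable

def build_situating_prompt(document: str, chunk: str) -> str:
    """Assemble a prompt asking an LLM to describe where a chunk sits in its document."""
    return (
        f"<document>\n{document}\n</document>\n\n"
        f"<chunk>\n{chunk}\n</chunk>\n\n"
        "Write one or two sentences that situate this chunk within the document, "
        "to improve search retrieval of the chunk. Answer with the context only."
    )

def augment_chunk(document: str, chunk: str, generate: Callable[[str], str]) -> str:
    """Prepend LLM-generated context to the chunk. `generate` is a hypothetical LLM call."""
    context = generate(build_situating_prompt(document, chunk)).strip()
    return f"{context}\n\n{chunk}"

def fake_llm(prompt: str) -> str:
    # Canned response standing in for a real long-context model.
    return "This chunk continues the list of offences punished by an indirect free kick."

augmented = augment_chunk("...full text...", "• prevents the goalkeeper ...", fake_llm)
print(augmented)
```

Note that every call carries the full document in its prompt, which is where the cost and input-length constraints of this method come from.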

For instance, in the previously mentioned Laws of the Game 2024/25 example, text chunking separated the offences listed in Text Chunk 2 from their heading, leading to semantic incompleteness. Using code provided by Anthropic and the Claude 3.5 model to supplement Text Chunk 2, the enhanced version is as follows:

<Chunk 2>

This chunk is located within Law 12 (Fouls and Misconduct), specifically in the section describing offences that result in an indirect free kick, detailing specific actions involving the goalkeeper that are considered infractions.

• prevents the goalkeeper from releasing the ball from the hands or kicks or attempts to kick the ball when the goalkeeper is in the process of releasing it

• initiates a deliberate trick for the ball to be passed (including from a free kick or goal kick) to the goalkeeper with the head, chest, knee etc. to circumvent the Law, whether or not the goalkeeper touches the ball with the hands; the goalkeeper is penalised if responsible for initiating the deliberate trick

• commits any other offence, not mentioned in the Laws, for which play is stopped to caution or send off a player

This shows that the LLM could infer from the full-text context that the clauses in Text Chunk 2 pertain to indirect free kick rulings. The model successfully added this contextual information at the beginning of the text chunk, thereby completing its semantic structure. When addressing the query "when is an indirect free kick awarded?", the RAG system could retrieve the augmented Text Chunk 2 through the supplemented "indirect free kick" reference and answer correctly.

While this method enhances semantic information in some scenarios, practical implementation faces challenges:

(1) Long documents often exceed the maximum input length of LLMs, preventing full-text processing;

(2) Semantic restoration of fragmented text chunks frequently requires more comprehensive contextual reconstruction than brief supplementary statements can provide.

To further evaluate semantic completion efficacy, we conducted experiments on Siemens' G120 manual. This technical document delivers comprehensive online alarm descriptions for various drive components and equipment systems. Below is an excerpt from page 408 of this documentation:

A08753 CAN: Message buffer overflow V4.7

Drive object:

CU_G110M_ASI, CU_G110M_DP, CU_G110M_PN, CU_G110M_USS, CU230P-2_BT, CU230P-2_CAN, CU230P-2_DP, CU230P-2_HVAC, CU230P-2_PN, CU240B-2, CU240B-2_DP, CU240D-2_DP, CU240D-2_DP_F, CU240D-2_PN, CU240D-2_PN_F, CU240E-2, CU240E-2_DP, CU240E-2_DP_F, CU240E-2_F, CU240E-2_PN_F, CU240E-2 PN, CU250D-2_DP_F, CU250D-2_PN_F, CU250S_V, CU250S_V_CAN, CU250S_V_DP, CU250S_V_PN, ET200, G120C_CAN

Valid as of version:

4.4

%1 Communication error to the higher-level control system

Reaction:

NONE

Acknowledge: NONE

Cause:

A message buffer overflow.

Alarm value (r2124, interpret decimal):

1: Non-cyclic send buffer (SDO response buffer) overflow.

2: Non-cyclic receive buffer (SDO receive buffer) overflow.

3: Cyclic send buffer (PDO send buffer) overflow.

Remedy:

- check the bus cable.

- set a higher baud rate (p8622).

- check the bit timing and if required optimize (p8623).

Re alarm value = 2:

- reduce the cycle times of the SDO receive messages.

- SDO request from master only after SDO feedback for previous SDO request. See also: p8622 (CAN bit rate), p8623 (CAN Bit Timing selection)

The content is divided into the following two text chunks:

<Chunk 1>

A08753 CAN: Message buffer overflow V4.7

Drive object:

CU_G110M_ASI, CU_G110M_DP, CU_G110M_PN, CU_G110M_USS, CU230P-2_BT, CU230P-2_CAN, CU230P-2_DP, CU230P-2_HVAC, CU230P-2_PN, CU240B-2, CU240B-2_DP, CU240D-2_DP, CU240D-2_DP_F, CU240D-2_PN, CU240D-2_PN_F, CU240E-2, CU240E-2_DP, CU240E-2_DP_F, CU240E-2_F, CU240E-2_PN_F, CU240E-2 PN, CU250D-2_DP_F, CU250D-2_PN_F, CU250S_V, CU250S_V_CAN, CU250S_V_DP, CU250S_V_PN, ET200, G120C_CAN

Valid as of version:

4.4

%1 Communication error to the higher-level control system

Reaction:

NONE

Acknowledge: NONE

<Chunk 2>

Cause:

A message buffer overflow.

Alarm value (r2124, interpret decimal):

1: Non-cyclic send buffer (SDO response buffer) overflow.

2: Non-cyclic receive buffer (SDO receive buffer) overflow.

3: Cyclic send buffer (PDO send buffer) overflow.

Remedy:

- check the bus cable.

- set a higher baud rate (p8622).

- check the bit timing and if required optimize (p8623).

Re alarm value = 2:

- reduce the cycle times of the SDO receive messages.

- SDO request from master only after SDO feedback for previous SDO request. See also: p8622 (CAN bit rate), p8623 (CAN Bit Timing selection)

Using this method to complete text chunks, Claude 3.5 generated the following completion content for these two text chunks:

<Chunk 1>

This chunk is part of a comprehensive alarm and fault documentation for SINAMICS drive systems, specifically describing a CAN communication error related to message buffer overflow across various drive object types and Control Unit models.

A08753 CAN: Message buffer overflow V4.7

Drive object:

CU_G110M_ASI, CU_G110M_DP, CU_G110M_PN, CU_G110M_USS, CU230P-2_BT, CU230P-2_CAN, CU230P-2_DP, CU230P-2_HVAC, CU230P-2_PN, CU240B-2, CU240B-2_DP, CU240D-2_DP, CU240D-2_DP_F, CU240D-2_PN, CU240D-2_PN_F, CU240E-2, CU240E-2_DP, CU240E-2_DP_F, CU240E-2_F, CU240E-2_PN_F, CU240E-2 PN, CU250D-2_DP_F, CU250D-2_PN_F, CU250S_V, CU250S_V_CAN, CU250S_V_DP, CU250S_V_PN, ET200, G120C_CAN

Valid as of version:

4.4

%1 Communication error to the higher-level control system

Reaction:

NONE

Acknowledge: NONE

<Chunk 2>

CAN communication error handling section, specifically addressing message buffer overflow scenarios in the SINAMICS drive system alarm documentation

Cause:

A message buffer overflow.

Alarm value (r2124, interpret decimal):

1: Non-cyclic send buffer (SDO response buffer) overflow.

2: Non-cyclic receive buffer (SDO receive buffer) overflow.

3: Cyclic send buffer (PDO send buffer) overflow.

Remedy:

- check the bus cable.

- set a higher baud rate (p8622).

- check the bit timing and if required optimize (p8623).

Re alarm value = 2:

- reduce the cycle times of the SDO receive messages.

- SDO request from master only after SDO feedback for previous SDO request. See also: p8622 (CAN bit rate), p8623 (CAN Bit Timing selection)

Although the LLM provides contextual supplementation for each chunk, the added content is vague and lacks specifics. When a user asks, "What issue causes A08753?", the supplemented Chunk 2 still does not indicate that this section describes the A08753 alarm code, so the RAG system still fails to provide a correct answer.

This case demonstrates that while contextual supplementation can partially restore information in certain scenarios, it still fails to address the semantic discontinuities between chunks. This limitation becomes particularly acute in complex application scenarios where precise inter-chunk semantic relationships are critical for accurate knowledge retrieval.

3.3 Limitations of Semantic Augmentation

Despite the effectiveness of semantic augmentation in certain scenarios, it has notable limitations:

- High Computational Cost: The method requires each text chunk to be input along with the full document, leading to significant computational overhead. This makes it difficult to scale for large document sets.

- Limited Applicability: The method works well for simple cases of missing semantics but struggles with more complex semantic fragmentation or issues involving hierarchical relationships, such as paragraph connections or fine-grained semantic information.
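
A back-of-the-envelope calculation illustrates the cost point. The document length and chunk size below are hypothetical, and the estimate ignores chunk overlap and any prompt caching a provider may offer. Because every chunk is sent together with the full document, input volume grows roughly with the square of document length.

```python
import math

def augmentation_input_tokens(doc_tokens: int, chunk_tokens: int) -> int:
    """Rough input-token count when each chunk is sent together with the full document."""
    n_chunks = math.ceil(doc_tokens / chunk_tokens)
    return n_chunks * (doc_tokens + chunk_tokens)

# Hypothetical 100,000-token document split into 800-token chunks.
total = augmentation_input_tokens(100_000, 800)
print(f"{total:,} input tokens")  # 125 calls of 100,800 tokens each
```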

4. Contextual Retrieval

We propose a novel Contextual Retrieval method that uses a long-context re-ranking model to simultaneously reorder large amounts of text chunks, enabling these chunks to gather contextual information during the re-ranking phase. Additionally, we use a directory structure model to identify the document's chapter tree and add corresponding chapter headings to the chunks, ensuring that global document information is retained. These two modules significantly improve the effectiveness of the RAG system while maintaining operational efficiency.

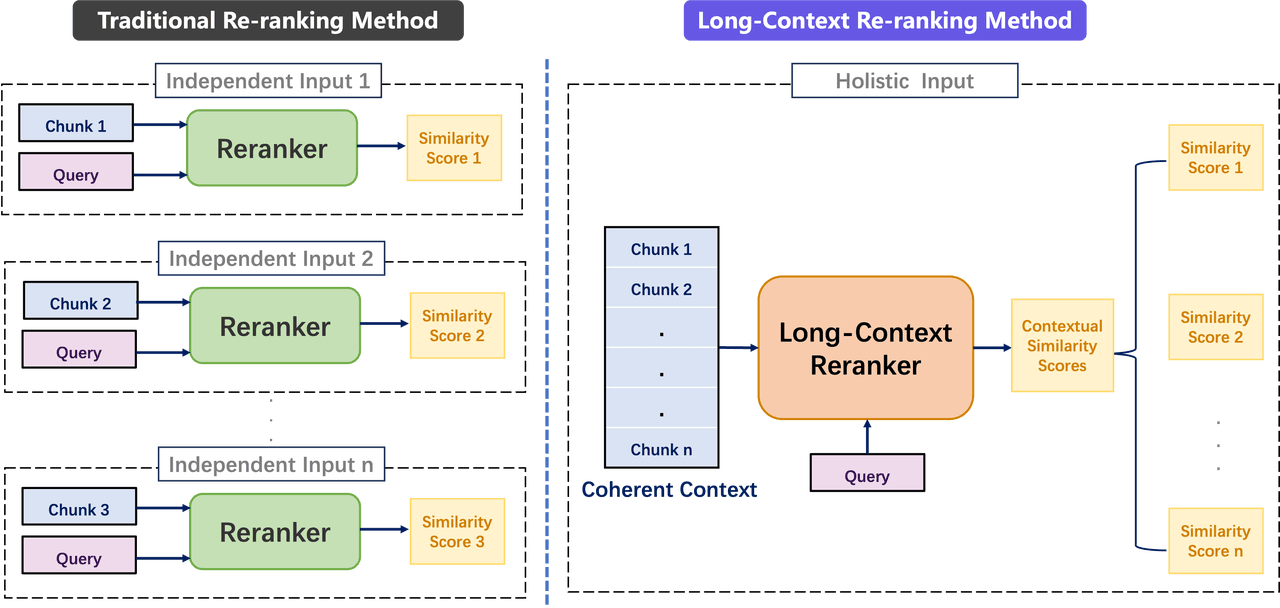

4.1 Restoration of Context: Long-Context Re-Ranking

Typically, re-ranking models input each text chunk along with the user's query independently, calculating the similarity of each chunk to the query. As shown on the left side of the diagram, if n text chunks are retrieved, the traditional re-ranking model processes each chunk separately and independently, calculating its relevance score to the query.

However, with this method, the model can only read one text chunk at a time, making it difficult to capture relationships between chunks due to text chunking-induced semantic loss and ambiguity.

To address this, we designed a long-context re-ranker that inputs multiple retrieved chunks simultaneously.

The model first concatenates the n chunks in the order they appear in the original text, creating a long, semantically coherent text. This text, together with the user's query, is input into the model for re-ranking.

This approach allows the model to calculate similarity scores for all n chunks in one go, and the chunks can complement each other in the re-ranking phase, overcoming the semantic fragmentation caused by chunking. When the number of retrieved chunks is large enough, the concatenated content restores the document's original context.
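
The input construction described above might look like the sketch below. It illustrates the idea only and is not our model's actual interface: the `Chunk` type, the `[chunk i]` marker format, and the `score_all` callable (one call that returns a score per chunk) are assumptions, and `stub_scorer` merely stands in for the re-ranking model.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Chunk:
    position: int  # order of the chunk in the original document
    text: str

def build_rerank_input(query: str, chunks: List[Chunk]) -> str:
    """Concatenate chunks in document order behind the query (marker format is assumed)."""
    ordered = sorted(chunks, key=lambda c: c.position)
    body = "\n".join(f"[chunk {i}]\n{c.text}" for i, c in enumerate(ordered))
    return f"Query: {query}\n\n{body}"

def rerank(query: str, chunks: List[Chunk],
           score_all: Callable[[str, int], List[float]], top_k: int) -> List[Chunk]:
    """Score all chunks in a single call, then keep the top_k by score."""
    ordered = sorted(chunks, key=lambda c: c.position)
    scores = score_all(build_rerank_input(query, ordered), len(ordered))
    ranked = sorted(zip(ordered, scores), key=lambda pair: pair[1], reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]

def stub_scorer(model_input: str, n: int) -> List[float]:
    # Stand-in for the model: pretends the first two chunks in document order are relevant.
    return [0.9, 0.8] + [0.1] * (n - 2)

retrieved = [Chunk(7, "Unrelated clause"),
             Chunk(4, "• prevents the goalkeeper ..."),
             Chunk(3, "An indirect free kick is awarded if a player:")]
top = rerank("when is an indirect free kick awarded?", retrieved, stub_scorer, top_k=2)
print([c.position for c in top])
```

Sorting by `position` before concatenation is what lets adjacent chunks read as continuous text, regardless of the order in which the retriever returned them.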

Furthermore, we employ an "Overlap Chunking" method during the chunking phase, where we split text into chunks of 800 tokens with 400 tokens overlapping between adjacent chunks. This overlap helps the retrieval model compute more consistent similarity scores between adjacent chunks, increasing the chances of retrieving related chunks together.
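
A sliding window is one straightforward way to implement the 800/400 split described above. In this sketch the integers stand in for tokenizer output, and both the function name and the handling of the final partial window are assumptions.

```python
def overlap_chunks(tokens, size=800, overlap=400):
    """Split a token list into windows of `size` tokens that share `overlap` tokens."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    step = size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + size])
        if start + size >= len(tokens):
            break  # the last window already reaches the end of the text
    return chunks

tokens = list(range(2000))  # integers standing in for real token ids
chunks = overlap_chunks(tokens)
print(len(chunks), [c[0] for c in chunks])
```

With a 400-token step, every token except those at the very start and end of the document appears in two chunks, so a sentence cut at one chunk boundary is still intact in the neighbouring chunk.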

Taking the previously mentioned question "when is an indirect free kick awarded?" as an example, we illustrate how long-context re-ranking addresses the semantic loss caused by text chunking.

<Chunk A>

An indirect free kick is awarded if a player:

• plays in a dangerous manner

• impedes the progress of an opponent without any contact being made

• is guilty of dissent, using offensive, insulting or abusive language and/or action(s) or other verbal offences

<Chunk B>

• prevents the goalkeeper from releasing the ball from the hands or kicks or attempts to kick the ball when the goalkeeper is in the process of releasing it

• initiates a deliberate trick for the ball to be passed (including from a free kick or goal kick) to the goalkeeper with the head, chest, knee etc. to circumvent the Law, whether or not the goalkeeper touches the ball with the hands; the goalkeeper is penalised if responsible for initiating the deliberate trick

• commits any other offence, not mentioned in the Laws, for which play is stopped to caution or send off a player

For Chunk A and Chunk B, a traditional re-ranking model scores each chunk against the question "when is an indirect free kick awarded?" independently. Since Chunk B does not directly mention indirect free kicks, it receives a lower relevance score and is discarded. The long-context re-ranking model, however, reads Chunk A and Chunk B together and can recognize that the three items in Chunk B continue the list that begins in Chunk A. Both chunks therefore receive high similarity scores, which makes up for the damage caused by text chunking.

Similarly, the long-context re-ranking model handles the G120 example mentioned earlier.

<Chunk 1>

A08753 CAN: Message buffer overflow V4.7

Drive object:

CU_G110M_ASI, CU_G110M_DP, CU_G110M_PN, CU_G110M_USS, CU230P-2_BT, CU230P-2_CAN, CU230P-2_DP, CU230P-2_HVAC, CU230P-2_PN, CU240B-2, CU240B-2_DP, CU240D-2_DP, CU240D-2_DP_F, CU240D-2_PN, CU240D-2_PN_F, CU240E-2, CU240E-2_DP, CU240E-2_DP_F, CU240E-2_F, CU240E-2_PN_F, CU240E-2 PN, CU250D-2_DP_F, CU250D-2_PN_F, CU250S_V, CU250S_V_CAN, CU250S_V_DP, CU250S_V_PN, ET200, G120C_CAN

Valid as of version:

4.4

%1 Communication error to the higher-level control system

Reaction:

NONE

Acknowledge: NONE

<Chunk 2>

Cause:

A message buffer overflow.

Alarm value (r2124, interpret decimal):

1: Non-cyclic send buffer (SDO response buffer) overflow.

2: Non-cyclic receive buffer (SDO receive buffer) overflow.

3: Cyclic send buffer (PDO send buffer) overflow.

Remedy:

- check the bus cable.

- set a higher baud rate (p8622).

- check the bit timing and if required optimize (p8623).

Re alarm value = 2:

- reduce the cycle times of the SDO receive messages.

- SDO request from master only after SDO feedback for previous SDO request. See also: p8622 (CAN bit rate), p8623 (CAN Bit Timing selection)

A traditional re-ranking model scores Chunk 1 and Chunk 2 against the question "What issue causes A08753?" separately. Since Chunk 2 is cut off from the preceding text, the model cannot determine whether its content concerns the A08753 alarm, so Chunk 2 receives a lower similarity score. In contrast, the long-context re-ranking model can combine the information in Chunk 1 and Chunk 2, identify that Chunk 2 refers to A08753, and assign it a higher similarity score. Long-context re-ranking therefore lets the model understand the context more accurately, improving the accuracy and completeness of the answer.

4.2 Full-Document View: Logical Document Hierarchy

While the long-context re-ranking method effectively addresses semantic loss and ambiguity issues, it does not fully resolve the problem of missing document structure. When text chunking disrupts the original hierarchical structure of a document, queries like "Please summarize Section 2 of Chapter 2" become challenging because the retrieval model struggles to recall all relevant chunks.

To address this, we trained a Logical Document Hierarchy Model capable of identifying the structure of a document. This model identifies the structure of chapters, sections, and multi-level headers during parsing, in order to clarify the document framework for locating and referencing specific content.

Taking the previously mentioned "Competitive Programmer’s Handbook" as an example, the directory tree recognized by the model is as follows:

Root: Competitive Programmer’s Handbook

│

├── Chapter 1 Introduction

│   ├── Programming languages

│   ├── Input and output

│   ├── Working with numbers

│   ├── Shortening code

│   ├── Mathematics

│   └── Contests and resources

│

├── Chapter 2 Time complexity

│   ├── Calculation rules

│   ├── Complexity classes

│   ├── Estimating efficiency

│   └── Maximum subarray sum

│

└── Chapter 3 Sorting

    ├── Sorting theory

    └── Sorting in C++

......

By combining this directory tree with the chunking strategy, we keep each chunk within a single section or chapter, which preserves thematic consistency and improves the quality of the chunks' semantic vectors. After chunking, we prepend the corresponding directory information to each chunk, ensuring that the global structure is preserved.
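
Attaching the directory information to a chunk could be done as in the sketch below. It assumes that each chunk already carries its heading path from the directory tree. The function names and the choice to start at heading level 2 are illustrative and do not describe our parser's actual output format.

```python
def heading_lines(path):
    """Render a heading path as markdown headings, starting at level 2."""
    return [f"{'#' * (level + 2)} {title}" for level, title in enumerate(path)]

def add_hierarchy(path, chunk_text):
    """Place the chunk's heading path in front of the chunk text."""
    return "\n\n".join(heading_lines(path) + [chunk_text])

# Heading path taken from the directory tree above.
path = ["Chapter 2 Time complexity", "Maximum subarray sum"]
chunk = add_hierarchy(path, "Algorithm 2\n\nIt is easy to make Algorithm 1 more efficient ...")
print(chunk)
```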

We take the "Maximum subarray sum" section of the "Competitive Programmer's Handbook" from Section 2.3 as an example to demonstrate the effect of this strategy. With this chunking strategy, the chunks for this section of Chapter 2 are as follows:

<Chunk 1>

## Chapter 2 Time complexity

### Maximum subarray sum

Algorithm 1

A straightforward way to solve the problem is to go through all possible subarrays, calculate the sum of values in each subarray and maintain the maximum sum. The following code implements this algorithm:

C++

<Chunk 2>

## Chapter 2 Time complexity

### Maximum subarray sum

Algorithm 2

It is easy to make Algorithm 1 more efficient by removing one loop from it. This is possible by calculating the sum at the same time when the right end of the subarray moves. The result is the following code:

C++

<Chunk 3>

## Chapter 2 Time complexity

### Maximum subarray sum

Algorithm 3

Surprisingly, it is possible to solve the problem in O(n) time, which means that just one loop is enough. The idea is to calculate, for each array position, the maximum sum of a subarray that ends at that position. After this, the answer for the problem is the maximum of those sums. The following code implements the algorithm:

C++

For the user question "How many algorithms are described in the 'Maximum subarray sum' section?", every chunk carries the "Maximum subarray sum" heading, so the retriever can accurately recall all the relevant chunks, and the LLM can see where each chunk sits in the hierarchy of the original document. Combining the chunking strategy with the directory structure therefore preserves the document's global information in every chunk, which supports more accurate answers.

5. Experimental Evaluation

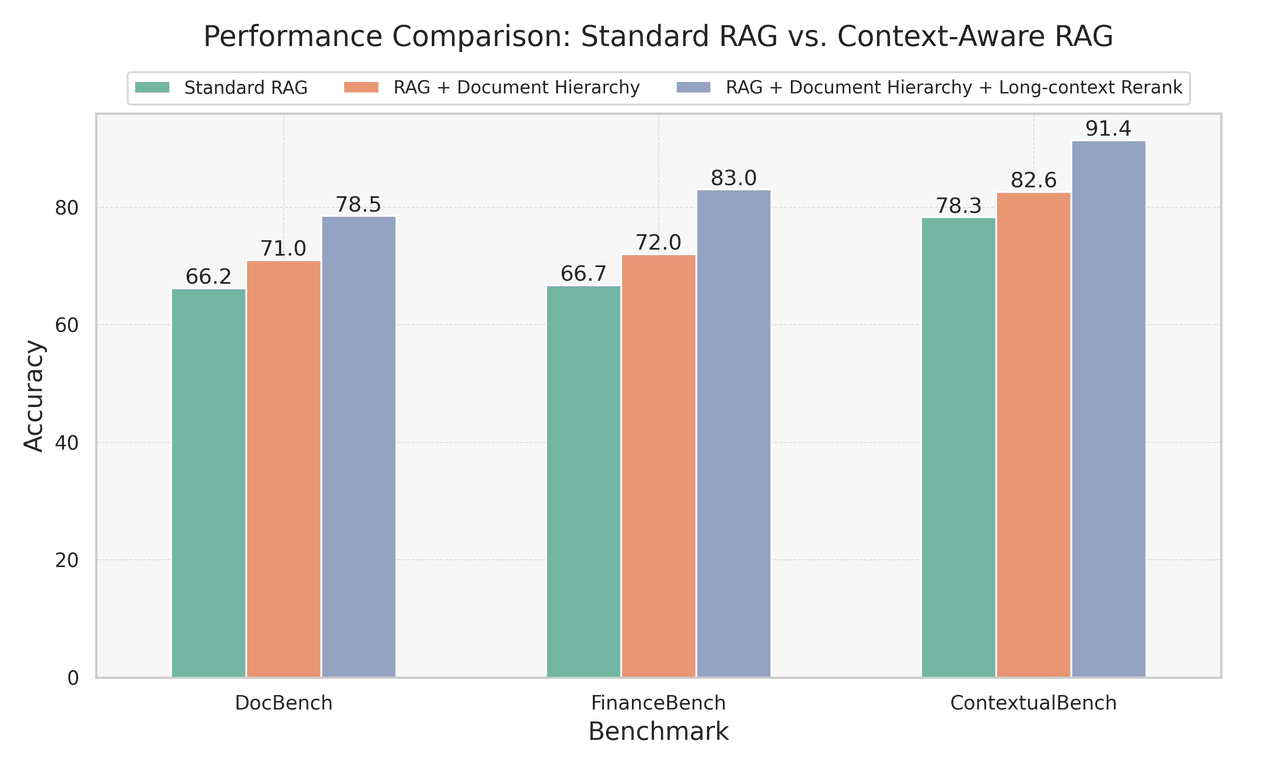

We applied the contextual retrieval method to the RAG system and evaluated it on three benchmarks: DocBench, FinanceBench, and ContextualBench, the last of which we collected and annotated from real-world business scenarios. The evaluation covered 342 documents and 1,495 questions from sectors such as finance, academia, government, and law.

We use GPT-4 to compare the RAG system's answers with the manually annotated standard answers and to score their accuracy and completeness. To ensure fair and accurate scoring, we spell out the evaluation process in the assessment prompt:

For each question, the model first classifies it as a "short-answer question" or a "long-answer question" based on the length of the standard answer.

For short-answer questions, the model is required to determine whether key details (such as numbers, specific nouns/verbs, dates, etc.) match the standard answer. If the answer is completely correct, it is scored 3 points; if the answer is incorrect, it receives 0 points.

For long-answer questions, the model's response may use different wording but must convey the same meaning and include the same key information as the standard answer:

A. If the answer is complete and accurate, covering all key information, it scores 3 points;

B. If the answer is accurate but lacks a small amount of key information (covering at least 70% of key information), it scores 2 points;

C. If the answer is basically correct but lacks a significant amount of key information (covering at least 30% of key information), it scores 1 point;

D. If the answer is inaccurate or lacks too much key information (covering less than 30% of key information), it scores 0 points.
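The rubric above can be read as a simple threshold function. The sketch below is only an illustration: in the actual evaluation GPT-4 makes these judgments directly from the text, and the inputs `is_accurate`, `coverage`, and `key_details_match` are hypothetical quantities introduced here to make the thresholds explicit.

```python
def short_answer_score(key_details_match: bool) -> int:
    """Short answers are all-or-nothing: 3 points if the key details match, else 0."""
    return 3 if key_details_match else 0


def long_answer_score(is_accurate: bool, coverage: float) -> int:
    """Map a long answer to 0-3 points.

    is_accurate: whether the answer conveys the same meaning without errors.
    coverage: fraction (0.0 to 1.0) of the standard answer's key information
              that the answer contains.
    """
    if not is_accurate:
        return 0
    if coverage >= 1.0:
        return 3  # complete and accurate
    if coverage >= 0.7:
        return 2  # accurate, small amount of key information missing
    if coverage >= 0.3:
        return 1  # basically correct, significant key information missing
    return 0      # too much key information missing


print(long_answer_score(True, 0.8))  # 0.8 falls in the "at least 70%" band
```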

We require the model to output a score of 0, 1, 2, or 3 for each question. The final accuracy is the total score across all questions divided by the maximum possible total (3 multiplied by the number of questions). The complete evaluation prompt is as follows:

### Task Overview:

You are tasked with evaluating user answers based on a given question and reference answer. Your goal is to assess the correctness of the user answer using a specific scoring metric.

### Evaluation Criteria:

1. The scoring system consists of four levels: 3 points, 2 points, 1 point, and 0 points.

2. Questions are divided into two categories: Short Answers and Long Answers.

3. If there is a factual error in the user’s answer, it should automatically receive 0 points.

4. **Short Answers/Directives**: Ensure that key details such as numbers, specific nouns/verbs, and dates match those in the reference answer. If the answer is correct, give 3 points; if incorrect, give 0 points.

5. **Long Answers/Abstractive**: The user’s answer may use different wording but must convey the same meaning and include the same key information as the reference answer to be considered correct.

- If the content is correct and all key points are present, give 3 points.

- If the content is correct but missing a few key points (covering at least 70% of the key points), give 2 points.

- If the content is correct but missing several key points (covering at least 30% of the key points), give 1 point.

- If the content is incorrect or missing too many key points (covering less than 30% of the key points), give 0 points.

### Evaluation Process:

1. Identify the type of question.

2. Apply the appropriate evaluation criteria.

3. Compare the user’s answer with the reference answer.

4. Assign a score of 0, 1, 2, or 3. You should output only the score, without any additional words.

Note: If the user answer is "0" or an empty string, it should automatically receive a score of 0.

-

Question: {{question}}

User Answer: {{sys_ans}}

Reference Answer: {{ref_ans}}

### Evaluation Form (score ONLY):

- **Correctness**:
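As a rough sketch of how this prompt might be used, the code below fills in the three placeholders, extracts the score from the judge's reply, and aggregates the scores into the final accuracy (total score divided by 3 times the number of questions). The function names are hypothetical, the call to the judge model is deliberately left out, and treating an unparseable reply as 0 is an assumption of this example rather than something the article specifies.

```python
import re


def render_prompt(template: str, question: str, sys_ans: str, ref_ans: str) -> str:
    """Substitute the {{question}}, {{sys_ans}} and {{ref_ans}} placeholders."""
    values = {"question": question, "sys_ans": sys_ans, "ref_ans": ref_ans}
    return re.sub(r"\{\{(\w+)\}\}", lambda m: values[m.group(1)], template)


def parse_score(judge_output: str) -> int:
    """Return the first standalone digit 0-3 in the reply.

    Assumption: a reply with no such digit is scored 0.
    """
    match = re.search(r"\b[0-3]\b", judge_output)
    return int(match.group()) if match else 0


def accuracy(scores: list[int]) -> float:
    """Total score divided by the maximum possible score (3 per question)."""
    if not scores:
        raise ValueError("no scores to aggregate")
    return sum(scores) / (3 * len(scores))


template = "Question: {{question}}\nUser Answer: {{sys_ans}}\nReference Answer: {{ref_ans}}"
print(render_prompt(template, "Q", "A", "R"))
print(accuracy([3, 2, 0, 1]))  # 6 / 12 = 0.5
```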

After the model completed the scoring, we sampled 100 questions for manual evaluation by two annotators and compared the model scores with the human scores. We treat a model score and a human score as consistent if they differ by at most 1 point. Under this criterion, the model's consistency with the two annotators is 97% and 94%, respectively, which indicates that GPT-4 scores at a level comparable to human annotators and reflects human scoring preferences.

Using this assessment method, we systematically evaluated and compared three configurations: the standard RAG, the RAG with integrated directory structure, and the RAG that uses both the directory structure and the contextual re-ranking model.
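The consistency check between model scores and human scores can be sketched as follows. The function name `agreement_rate` and the sample scores are illustrative only; the 1-point tolerance is the criterion stated in the article.

```python
def agreement_rate(model_scores, human_scores, tolerance=1):
    """Fraction of questions where model and human scores differ by at most `tolerance`."""
    if len(model_scores) != len(human_scores):
        raise ValueError("score lists must have the same length")
    if not model_scores:
        raise ValueError("no scores to compare")
    consistent = sum(
        abs(m - h) <= tolerance for m, h in zip(model_scores, human_scores)
    )
    return consistent / len(model_scores)


# Made-up scores for four questions: differences are 0, 2, 1, 0.
print(agreement_rate([3, 2, 0, 1], [3, 0, 1, 1]))  # 3 of 4 consistent = 0.75
```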

Our evaluation showed that, compared to traditional fixed-length chunking, the directory structure-based chunking strategy led to a significant performance improvement, with an average increase of 4.8 percentage points in answer accuracy. Adding long-context re-ranking on top of directory structure-based chunking raised the improvement to 13.9 percentage points over the standard RAG system.

Notably, the contextual re-ranking mechanism shows a stronger advantage on complex questions. On the relatively simple DocBench dataset the improvement is stable. On the FinanceBench dataset, which contains many specialized questions from the financial domain, the improvement brought by contextual re-ranking is particularly large, reaching 16.3 percentage points. This finding supports the effectiveness of the contextual re-ranking mechanism on complex domain questions.

Conclusion

Through extensive RAG practice, we identified that the preliminary steps of vectorizing data, particularly text chunking, disrupt the original semantic continuity of documents, causing issues like semantic loss, ambiguity, and missing global context. These issues are key factors affecting the accuracy of RAG-generated answers.

To address these problems, we proposed a novel contextual retrieval strategy. By introducing a long-context re-ranking model, we ensure that the model has sufficient contextual information during the re-ranking phase, effectively restoring the semantic integrity of the document. In addition, the directory structure model ensures that global document information is preserved. Experimental results show that the RAG system using contextual retrieval improves answer accuracy by an average of 13.9 percentage points.
